Can you ask students how they are currently using generative AI tools? What clarity will students need to distinguish between appropriate and inappropriate uses of these tools? Consider how you might adjust assignments to either incorporate generative AI into your course, or to identify areas where students may lean on the technology, and turn those hot spots into opportunities to encourage deeper and more critical thinking.

Be open to continuing to learn more and to having ongoing conversations with colleagues, your department, people in your discipline, and even your students about the impact generative AI is having. Decide whether and when you want students to use the technology in your courses, and clearly communicate your parameters and expectations to them.

Be clear and direct about your expectations. We all want to discourage students from using generative AI to complete assignments at the expense of learning critical skills that will affect their success in their majors and careers. We would also like to take some time to focus on the opportunities that generative AI presents.

We also recommend that you consider the accessibility of generative AI tools as you explore their potential uses, especially those that students may be required to interact with. Finally, it is important to consider the ethical implications of using such tools. These topics are fundamental if you are considering using AI tools in your assignment design.

Our goal is to support faculty in enhancing their teaching and learning experiences with the latest AI technologies and tools. As such, we look forward to providing various opportunities for professional development and peer learning. As you explore further, you may be interested in CTI's generative AI events. If you want to explore generative AI beyond our available resources and events, please reach out to schedule a consultation.

How Does AI Benefit Businesses?

I am Pinar Seyhan Demirdag, and I'm the co-founder and AI director of Seyhan Lee. Throughout this LinkedIn Learning course, we will talk about how to use that tool to drive the creation of your intention. Join me as we dive deep into this new creative revolution that I'm so excited about, and let's discover together how each of us can have a place in this age of advanced technologies.

A neural network is a way of processing information that mimics biological neural systems like the connections in our own brains. It's how AI can forge connections among seemingly unrelated sets of information. The concept of a neural network is closely related to deep learning. How does a deep learning model use the neural network concept to connect data points? Start with how the human brain works.

The brain contains many interconnected neurons. These neurons use electrical impulses and chemical signals to communicate with one another and transmit information between different areas of the brain. An artificial neural network (ANN) is based on this biological phenomenon, but formed by artificial neurons that are made from software modules called nodes. These nodes use mathematical calculations (instead of chemical signals as in the brain) to communicate and transmit information.
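The calculation a node performs can be sketched in a few lines. This is a minimal illustration, assuming a sigmoid activation; the function names, weights, and inputs below are made up for the example and do not come from any particular library.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial node: a weighted sum of its inputs squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weight_rows, biases):
    """A layer is several nodes that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Illustrative (hand-picked, untrained) weights: two inputs -> two hidden nodes -> one output.
hidden = layer([0.5, -1.0], [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])
output = neuron(hidden, [1.0, -1.0], 0.0)
print(hidden, output)
```

Each node passes a single number forward, which is the software counterpart of one neuron signalling the next.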

AI In Daily Life

A large language model (LLM) is a deep learning model trained by applying transformers to a massive set of generalized data. Diffusion models learn the process of turning a natural image into blurry visual noise, and generate new images by reversing that process.
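The image-to-noise direction of a diffusion model can be sketched as repeated mixing with Gaussian noise. This is a minimal sketch under stated assumptions: the "image" is a short list of pixel values, the noise amount `beta` is a constant chosen for illustration, and the function names are hypothetical. It shows only the noising process, not the learned reversal.

```python
import math
import random


def add_noise_step(pixels, beta, rng):
    """Shrink each pixel slightly and mix in Gaussian noise with variance beta."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]


def forward_diffusion(pixels, betas, rng):
    """Return every intermediate image, from the clean input to the noisiest one."""
    trajectory = [list(pixels)]
    for beta in betas:
        trajectory.append(add_noise_step(trajectory[-1], beta, rng))
    return trajectory


rng = random.Random(0)                      # fixed seed so runs are repeatable
image = [0.0, 0.25, 0.5, 0.75, 1.0]         # toy 5-pixel "image"
steps = forward_diffusion(image, [0.1] * 50, rng)
print(steps[0], steps[-1])
```

After enough steps almost nothing of the original pixel pattern remains, which is the "visual noise" end state described above.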

Deep learning models can be described in terms of parameters. A simple credit prediction model trained on 10 inputs from a loan application form would have 10 parameters. By contrast, an LLM can have billions of parameters. OpenAI's Generative Pre-trained Transformer 4 (GPT-4), one of the foundation models that powers ChatGPT, is reported to have 1 trillion parameters.
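To make the notion of a parameter count concrete, here is a small sketch that counts the weights (and optionally biases) of a fully connected network. The helper name and the layer sizes are assumptions for illustration; counting one weight per input, with no bias term, reproduces the 10-parameter credit model mentioned above.

```python
def count_parameters(layer_sizes, include_bias=True):
    """Count the weights (and optionally biases) of a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out          # one weight per input/output pair
        if include_bias:
            total += n_out             # one bias per output node
    return total


# One weight per loan-application input, no bias term.
linear_credit_model = count_parameters([10, 1], include_bias=False)

# Adding a single hidden layer of 32 nodes already multiplies the count.
small_network = count_parameters([10, 32, 1])

print(linear_credit_model, small_network)
```

The count grows with the product of adjacent layer widths, which is why models with many wide layers reach billions of parameters.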

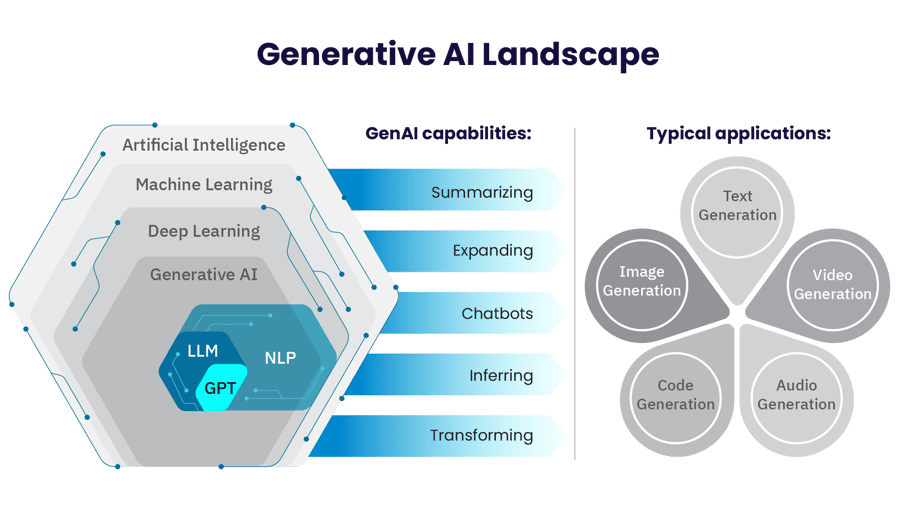

Generative AI refers to a category of AI algorithms that generate new outputs based on the data they have been trained on. It uses a type of deep learning called generative adversarial networks and has a wide range of applications, including creating images, text and audio. While there are concerns about the impact of AI on the job market, there are also potential benefits such as freeing up time for humans to focus on more creative and value-adding work.

Excitement is building around the possibilities that AI tools unlock, but what exactly these tools are capable of and how they work is still not widely understood. We could write about this in detail, but given how advanced tools like ChatGPT have become, it only seems right to see what generative AI has to say about itself.

Without further ado, generative AI as explained by generative AI. Generative AI refers to a category of artificial intelligence (AI) algorithms that generate new outputs based on the data they have been trained on.

In simple terms, the AI was fed information about what to write about and then generated the article based on that information. In conclusion, generative AI is a powerful tool that has the potential to revolutionize several industries. With its ability to create new content based on existing data, generative AI has the potential to change the way we create and consume content in the future.

Cybersecurity AI

Some of the most well-known architectures are variational autoencoders (VAEs), generative adversarial networks (GANs), and transformers. It's the transformer architecture, first shown in a seminal 2017 paper from Google, that powers today's large language models. However, the transformer architecture is less suited for other types of generative AI, such as image and audio generation.

An autoencoder is made up of two parts, an encoder and a decoder. The encoder compresses input data into a lower-dimensional space, known as the latent (or embedding) space, that preserves the most essential aspects of the data. A decoder can then use this compressed representation to reconstruct the original data. Once an autoencoder has been trained in this way, it can use novel inputs to generate what it considers the appropriate outputs.
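The compress-then-reconstruct idea can be illustrated with a deliberately tiny example. This sketch assumes 2-D points that lie on the line y = 2x and uses a hand-picked projection direction in place of trained weights, so it shows only the shape of the computation, not how an autoencoder is actually learned. All names are hypothetical.

```python
import math

# Unit vector along y = 2x. A real autoencoder would learn this from data.
DIRECTION = (1.0 / math.sqrt(5.0), 2.0 / math.sqrt(5.0))


def encode(point):
    """Compress a 2-D point into a single latent number."""
    return point[0] * DIRECTION[0] + point[1] * DIRECTION[1]


def decode(code):
    """Rebuild a 2-D point from its 1-D latent code."""
    return (code * DIRECTION[0], code * DIRECTION[1])


point = (3.0, 6.0)
latent = encode(point)            # one number instead of two
reconstructed = decode(latent)
print(latent, reconstructed)
```

Because the toy data has only one real degree of freedom, a one-number latent code is enough to rebuild it; points off the line would be reconstructed only approximately.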

A GAN pits two neural networks against each other, a generator and a discriminator. The generator strives to create realistic data, while the discriminator aims to distinguish between those generated outputs and real "ground truth" outputs. Every time the discriminator catches a generated output, the generator uses that feedback to try to improve the quality of its outputs.

In the case of language models, the input consists of strings of words that make up sentences, and the transformer predicts what words will come next (we'll get into the details below). In addition, transformers can process all the elements of a sequence in parallel rather than marching through it from beginning to end, as earlier types of models did; this parallelization makes training faster and more efficient.

All the numbers in the vector represent various aspects of the word: its semantic meanings, its relationship to other words, its frequency of use, and so on. Similar words, like elegant and fancy, will have similar vectors and will also be near each other in the vector space. These vectors are called word embeddings.
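One common way to measure how near two embeddings are is cosine similarity. The sketch below uses made-up three-number vectors purely for illustration; real embeddings have hundreds or thousands of dimensions and are learned during training.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, not taken from any real model.
embeddings = {
    "elegant": [0.90, 0.80, 0.10],
    "fancy":   [0.85, 0.75, 0.20],
    "tractor": [0.10, 0.20, 0.90],
}

similar = cosine_similarity(embeddings["elegant"], embeddings["fancy"])
different = cosine_similarity(embeddings["elegant"], embeddings["tractor"])
print(similar, different)
```

With these toy values the two related words score much higher than the unrelated pair, which is the sense in which similar words sit near each other in the vector space.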

When the model is generating text in response to a prompt, it's using its predictive powers to decide what the next word should be. When generating longer pieces of text, it predicts the next word in the context of all the words it has written so far; this function increases the coherence and continuity of its writing.
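The generate-one-word-then-repeat loop can be sketched with a much simpler stand-in for a transformer. The example below swaps in a bigram table, which looks only at the single previous word rather than at everything written so far, and always picks the most frequent follower. The corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_words=5):
    """Repeatedly append the most frequent follower of the last word."""
    out = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(out[-1])
        if not followers:
            break                      # no known continuation for this word
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat and the cat sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the cat", max_new_words=3))
```

A transformer follows the same loop but conditions each prediction on the whole preceding sequence, which is what gives its longer outputs their coherence.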
